
For ML practitioners, the natural expectation is that a new ML model that shows promising results offline will also succeed in production. But often, that’s not the case. ML models that outperform on test data can underperform for real production users. This discrepancy between offline and online metrics is often a big challenge in applied machine learning.

In this article, we will explore what online and offline metrics really measure, why they differ, and how ML teams can build models that perform well both online and offline.

The Comfort of Offline Metrics

Offline model evaluation is the first checkpoint for any model headed to deployment. Training data is usually split into train sets and validation/test sets, and evaluation results are calculated on the latter. The metrics used for evaluation vary by model type: a classification model usually uses precision, recall, AUC, etc.; a recommender system uses NDCG and MAP; a forecasting model uses RMSE, MAE, MAPE, etc.
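As a rough illustration of the forecasting metrics named above, here is a minimal sketch in plain Python. The function names and the sample numbers are hypothetical and chosen only for illustration; in practice a library implementation would usually be used instead.

```python
import math

def rmse(actual, predicted):
    """Root mean squared error: large misses are penalized more than small ones."""
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))

def mae(actual, predicted):
    """Mean absolute error: the average size of a miss, in the target's units."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def mape(actual, predicted):
    """Mean absolute percentage error, skipping rows where the actual value is 0."""
    ratios = [abs(a - p) / abs(a) for a, p in zip(actual, predicted) if a != 0]
    return 100.0 * sum(ratios) / len(ratios)

# Hypothetical held-out demand values and model forecasts (illustrative only).
actual = [100.0, 120.0, 80.0, 200.0]
predicted = [110.0, 115.0, 70.0, 160.0]

print(f"RMSE: {rmse(actual, predicted):.2f}")
print(f"MAE:  {mae(actual, predicted):.2f}")
print(f"MAPE: {mape(actual, predicted):.2f}%")
```

Note that all three numbers depend entirely on which rows end up in the held-out set, which is why the choice of evaluation dataset matters so much.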

Offline evaluation makes rapid iteration possible, as you can run multiple model evaluations per day, compare their results, and get quick feedback. But it has limits. Evaluation results depend heavily on the dataset you choose. If the dataset doesn’t represent production traffic, you can get a false sense of confidence. Offline evaluation also ignores online factors like latency, backend limitations, and dynamic user behavior.

The Reality Check of Online Metrics

Online metrics, in contrast, are measured in a live production environment through A/B testing or live monitoring. These metrics are the ones that matter to the business. For recommender systems, they can be funnel rates like click-through rate (CTR) and conversion rate (CVR), or retention. For a forecasting model, they can be cost savings, a reduction in out-of-stock events, etc.

The obvious challenge with online experiments is that they’re expensive. Each A/B test consumes experiment traffic that could have gone to another experiment. Results take days, sometimes even weeks, to stabilize. On top of that, online signals can be noisy, i.e., affected by seasonality and holidays, which can mean more data science bandwidth is needed to isolate the model’s true effect.
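To make the noise problem concrete, the sketch below runs a two-proportion z-test on CTR for a control and a treatment arm. This is one common way to read an A/B test, not necessarily the one any given team uses; the function name and the click and view counts are hypothetical.

```python
import math

def two_proportion_z_test(clicks_a, views_a, clicks_b, views_b):
    """Compare CTR of arm B against arm A; returns (ctr_a, ctr_b, z, p_value)."""
    ctr_a = clicks_a / views_a
    ctr_b = clicks_b / views_b
    # Pooled CTR under the null hypothesis that both arms share the same rate.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (ctr_b - ctr_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return ctr_a, ctr_b, z, p_value

# Hypothetical experiment counts (illustrative only).
ctr_a, ctr_b, z, p_value = two_proportion_z_test(
    clicks_a=500, views_a=10_000, clicks_b=560, views_b=10_000
)
print(f"control CTR={ctr_a:.4f}, treatment CTR={ctr_b:.4f}, z={z:.2f}, p={p_value:.3f}")
```

With these made-up counts, a 12% relative CTR lift still lands slightly above the conventional 0.05 threshold, which is one reason online tests often need more traffic or more time before a result can be trusted.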

| Metric Type | Pros | Cons |
| --- | --- | --- |
| Offline metrics, e.g., AUC, accuracy, RMSE, MAPE | Fast, repeatable, and cheap | Don’t reflect the real world |
| Online metrics, e.g., CTR, retention, revenue | True business impact reflecting the real world | Expensive, slow, and noisy (affected by external factors) |

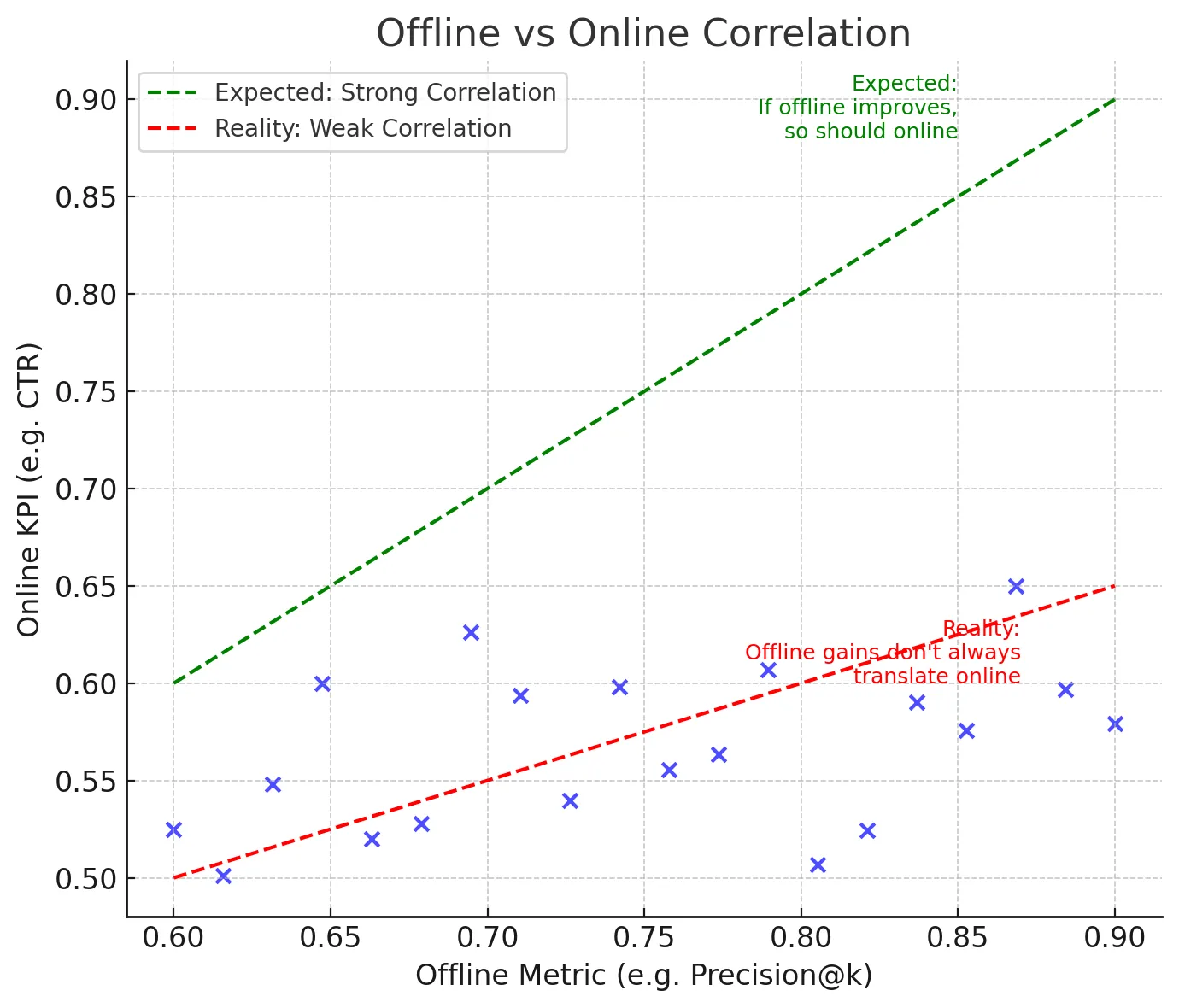

The Online-Offline Disconnect

So why do models that shine offline stumble online? First, user behavior is very dynamic, and models trained in the past may not be able to keep up with current user demands. A simple example is a recommender system trained in winter that may not provide the right recommendations come summer, since user preferences have changed. Second, feedback loops play a pivotal part in the online-offline discrepancy. Experimenting with a model in production changes what users see, which in turn changes their behavior, which affects the data that you collect. This recursive loop doesn’t exist in offline testing.

Offline metrics are considered proxies for online metrics. But often they don’t line up with real-world goals. For example, root mean squared error (RMSE) minimizes overall error but can still fail to capture extreme peaks and troughs that matter a lot in supply chain planning. In addition, app latency and other factors can also affect user experience, which in turn affects business metrics.

Bridging the Gap

The good news is that there are ways to reduce the online-offline discrepancy problem.

- Choose better proxies: Choose multiple proxy metrics that can approximate business outcomes instead of overindexing on one metric. For example, a recommender system might combine precision@k with other factors like diversity. A forecasting model might evaluate stockout reduction and other business metrics on top of RMSE.

- Study correlations: Using past experiments, we can analyze which offline metrics correlated with winning online outcomes. Some offline metrics will be consistently better than others at predicting online success. Documenting these findings and using these metrics will help the whole team know which offline metrics they can rely on.

- Simulate interactions: There are some techniques in recommendation systems, like bandit simulators, that replay users’ historical logs and estimate what would have happened if a different ranking had been shown. Counterfactual evaluation can also help approximate online behavior using offline data. Methods like these can help narrow the online-offline gap.

- Monitor after deployment: Even after successful A/B tests, models drift as user behavior evolves (like the winter and summer example above). So it’s always preferable to monitor both input data and output KPIs to ensure that the discrepancy doesn’t silently reopen.
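The "simulate interactions" idea can be sketched with inverse propensity scoring (IPS), one common form of counterfactual evaluation. The article does not say which estimator to use, so treat this as an assumption. It also assumes the logs record the probability with which the old policy showed each item; the log format, the contexts, and the `target_policy_prob` function are hypothetical.

```python
def ips_estimate(logs, target_policy_prob):
    """Estimate the average reward a new policy would have earned on logged traffic.

    logs: list of (context, action, reward, logging_prob) tuples, where
          logging_prob is the probability the old policy gave to the shown action.
    target_policy_prob: function (context, action) -> probability under the new policy.
    """
    total = 0.0
    for context, action, reward, logging_prob in logs:
        # Reweight each logged reward by how much more (or less) likely
        # the new policy is to take the same action.
        weight = target_policy_prob(context, action) / logging_prob
        total += weight * reward
    return total / len(logs)

# Hypothetical logs from an old policy that showed item "A" or "B" uniformly at random.
logs = [
    ("mobile", "A", 1, 0.5),
    ("mobile", "B", 0, 0.5),
    ("desktop", "A", 0, 0.5),
    ("desktop", "B", 1, 0.5),
]

def target_policy_prob(context, action):
    """Hypothetical new policy: always show A on mobile and B on desktop."""
    preferred = "A" if context == "mobile" else "B"
    return 1.0 if action == preferred else 0.0

logged_reward = sum(reward for _, _, reward, _ in logs) / len(logs)
estimated_reward = ips_estimate(logs, target_policy_prob)
print(f"logged policy reward: {logged_reward:.2f}")
print(f"IPS estimate for new policy: {estimated_reward:.2f}")
```

The estimate is only as good as the logged propensities: if the old policy never showed an item in some context, IPS has nothing to reweight there, and small propensities make the estimate high-variance.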

Practical Example

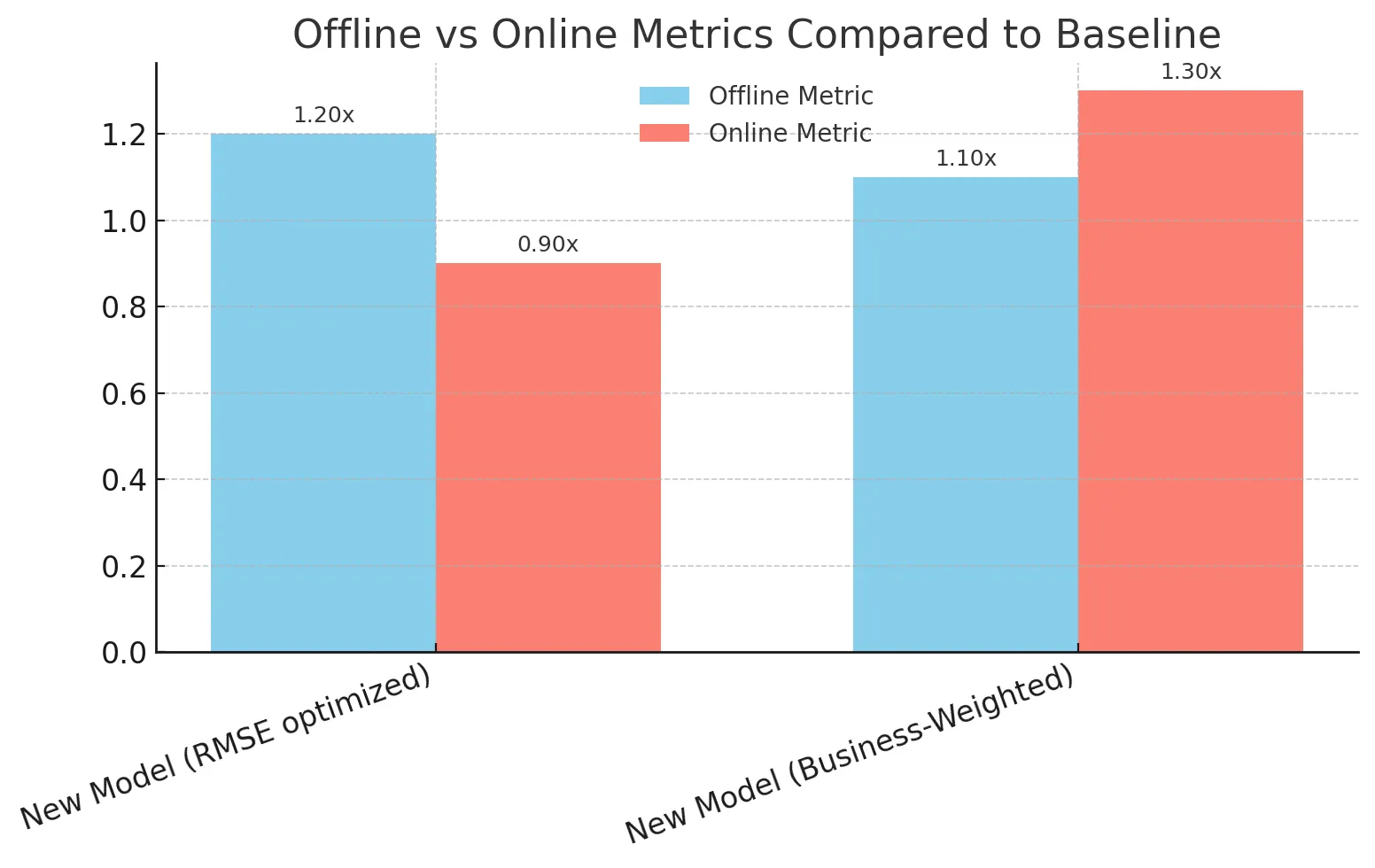

Consider a retailer deploying a new demand forecasting model. The model showed promising results offline (in RMSE and MAPE), which made the team very excited. But when tested online, the business saw minimal improvements, and on some metrics things even looked worse than baseline.

The problem was proxy misalignment. In supply chain planning, underpredicting demand for a trending product causes lost sales, while overpredicting demand for a slow-moving product leads to wasted inventory. The offline metric RMSE treated both as equal, but real-world costs were far from symmetric.

The team decided to redefine their evaluation framework. Instead of relying only on RMSE, they defined a custom business-weighted metric that penalized underprediction more heavily for trending products and explicitly tracked stockouts. With this change, the next model iteration delivered both strong offline results and online revenue gains.
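A business-weighted metric of this kind might look like the sketch below. It is not the retailer’s actual metric: the function name, the 3x underprediction penalty, and the sample data are all hypothetical, chosen only to show how an asymmetric cost separates two forecasts that RMSE scores identically.

```python
import math

def rmse(actual, predicted):
    """Symmetric error: over- and underprediction of the same size cost the same."""
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))

def business_weighted_error(actual, predicted, trending,
                            under_penalty=3.0, over_penalty=1.0):
    """Mean absolute error with a heavier cost for underpredicting trending products.

    The penalty values are hypothetical; in practice they would be derived from
    the relative cost of lost sales versus excess inventory.
    """
    total = 0.0
    for a, p, is_trending in zip(actual, predicted, trending):
        error = a - p  # positive means the forecast was too low
        if error > 0 and is_trending:
            total += under_penalty * error
        else:
            total += over_penalty * abs(error)
    return total / len(actual)

# Hypothetical demand for two trending products (illustrative only).
actual = [100.0, 100.0]
trending = [True, True]
forecast_low = [80.0, 100.0]    # underpredicts the first product by 20 units
forecast_high = [120.0, 100.0]  # overpredicts the first product by 20 units

print("RMSE:", rmse(actual, forecast_low), rmse(actual, forecast_high))
print("Weighted:",
      business_weighted_error(actual, forecast_low, trending),
      business_weighted_error(actual, forecast_high, trending))
```

Both forecasts get the same RMSE, but the weighted metric scores the underpredicting forecast as worse, which matches the cost asymmetry described above.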

Closing Thoughts

Offline metrics are like rehearsals for a dance performance: you can learn quickly, test ideas, and fail in a small, controlled environment. Online metrics are like the actual performance: they measure real audience reactions and whether your changes deliver true business value. Neither alone is enough.

The real challenge lies in finding the offline evaluation frameworks and metrics that best predict online success. When done well, teams can experiment and innovate faster, minimize wasted A/B tests, and build better ML systems that perform well both offline and online.

Frequently Asked Questions

A. Because offline metrics don’t capture dynamic user behavior, feedback loops, latency, and real-world costs that online metrics measure.

A. They’re fast, cheap, and repeatable, making quick iteration possible during development.

A. They reflect true business impact like CTR, retention, or revenue in live settings.

A. By choosing better proxy metrics, studying correlations, simulating interactions, and monitoring models after deployment.

A. Yes, teams can design business-weighted metrics that penalize errors differently to reflect real-world costs.
